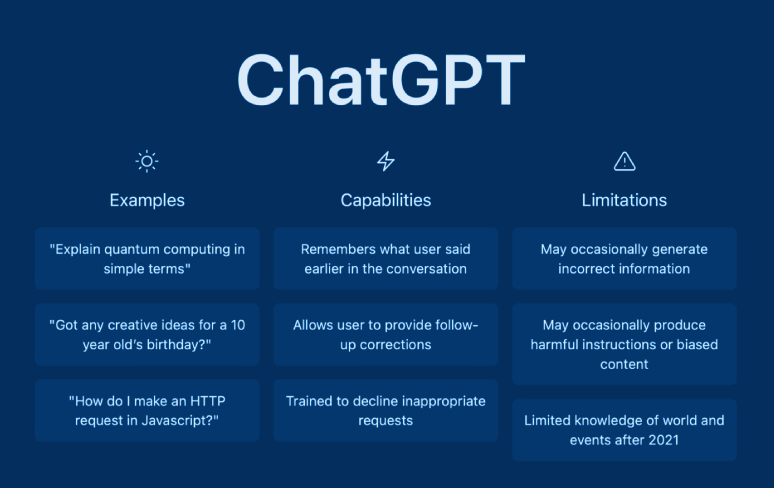

In an era where AI tools such as ChatGPT are capable of producing academic content, universities worldwide are leveraging AI detectors to uncover potential academic misconduct. However, these tools have sparked controversy among international students in Australia, who question the detectors' reliability and fear being wrongly accused of cheating.

The AI Detection Dilemma: Fears of Inaccuracy and Bias

Jia Li, an international student using a pseudonym, shared her experience with an AI detector that flagged more than half of her essay as AI-generated. The flagged content included sentences she wrote in Chinese and translated into English using a computer, as well as some she authored in English herself.

Li’s concern echoes a growing sentiment among international students who fear these tools may be unreliable and could lead to false accusations of academic dishonesty. A recent Stanford University study lent weight to this worry, finding that AI detectors can be unreliable and biased against non-native English writers.

The ‘Perplexity’ Factor: A Problematic Metric?

Stanford University’s Assistant Professor of Biomedical Data Science, James Zou, explained that many current AI detection algorithms rely heavily on a ‘perplexity’ metric, which in effect measures how unexpected or complex the word choices in a text are: writing built from common, predictable words scores low and is more likely to be judged machine-made. This reliance often leads to the misclassification of non-native speakers’ writing as AI-generated, as they tend to use fewer complex words. Furthermore, translation and grammar tools used by non-native speakers can reduce the complexity of their writing, prompting detectors to flag it as AI-generated.
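
To make the metric concrete, here is a minimal sketch of how perplexity can be computed. It is an illustration only and not how Turnitin, ZeroGPT or any detector in the Stanford study works: commercial detectors are understood to score text with large neural language models, while this toy uses a simple word-frequency (unigram) model with add-one smoothing. The function names and sample sentences are hypothetical.

```python
import math
from collections import Counter


def train_unigram(corpus_tokens):
    """Build a toy unigram model (an assumption for illustration only)."""
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot shared by all unseen words

    def prob(token):
        # Add-one smoothing so unseen words get a small, non-zero probability.
        return (counts[token] + 1) / (total + vocab_size)

    return prob


def perplexity(tokens, prob):
    """Perplexity = exp of the average negative log-probability per token."""
    if not tokens:
        raise ValueError("cannot score an empty text")
    avg_neg_log = -sum(math.log(prob(t)) for t in tokens) / len(tokens)
    return math.exp(avg_neg_log)


# Hypothetical reference text standing in for what the model has "seen".
reference = "the student wrote the essay and the teacher read the essay".split()
prob = train_unigram(reference)

plain = "the student wrote the essay".split()        # common, expected words
unusual = "the pupil composed the treatise".split()  # rarer word choices

print(f"plain:   {perplexity(plain, prob):.2f}")
print(f"unusual: {perplexity(unusual, prob):.2f}")
```

The sentence built from familiar words receives the lower score. A detector that treats low perplexity as a sign of machine authorship would therefore be more suspicious of the plainer sentence, which is the pattern the study links to false flags on non-native writers.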

Universities and AI Companies Respond

Reacting to these concerns, universities and AI companies alike have stated that the initial detection by these tools is not definitive proof of cheating but rather a trigger for further investigation.

For instance, the University of New South Wales (UNSW) in Sydney is using Turnitin’s new AI-writing detection tool. A spokesperson from UNSW emphasized that the tool’s findings do not automatically result in academic misconduct allegations but initiate a further probe.

Similarly, a spokesperson from ZeroGPT, one of the tools included in the Stanford study, maintained that their detector was accurate and not biased against non-native English writers, adding that the company was “always looking for ways to improve” its service.

Educational Management Perspective: A Call for Balance

Raghwa Gopal, CEO of M Square Media (MSM), an education management company, commented on the situation.

“It’s crucial to have a balanced approach towards the adoption of AI in education. While we understand the need for academic integrity, it’s equally important to ensure that these tools don’t unfairly penalize students, particularly those who are non-native English speakers,” he said.

Gopal further highlighted the importance of a broader dialogue involving all stakeholders, including students, educators, and AI tool developers, to improve the accuracy of these AI detectors and ensure they are culturally sensitive and inclusive.

“In the pursuit of preventing AI-assisted cheating, we must not overlook the basic principles of fairness and equity in education. It’s important to keep in mind that these tools are still developing and should be used responsibly, complementing, not replacing human judgment,” he added.

Navigating a Way Forward

The recent developments underscore the need for ongoing reassessment and refinement in the use of AI tools in education.

“As the field of AI continues to evolve, we need to continually reassess and refine the tools we use. It’s crucial that we work towards solutions that maintain academic integrity without compromising the trust and confidence of our student community,” Gopal concluded.

The current situation certainly presents a challenge for all parties involved—students, educators, universities and AI tool developers. Striking a balance between maintaining academic integrity and ensuring fair evaluation of student work, especially for those who are non-native English speakers, remains a critical task. The ongoing dialogue and commitment to refining these tools reflect a hopeful step towards a more equitable solution in the rapidly evolving landscape of AI-assisted education.

By Our Reporter
